This is taking more time than I thought it would, as usual. Being my arrogant self, I thought I had covered a lot of the material, but there are a bunch of detail gaps to fill. I have the most trouble with authentication and encryption protocols, and given that I won’t see any direct questions from this VCE, I’m going to have to really nail those down. The other thing is ports, which can be a bit mysterious at times because A doesn’t always map to B, if that makes sense. So I need to really spend time on those and figure them out. It’s interesting to learn things, and I find it useful in all sorts of scenarios where logic plays a factor, even if the studied subjects don’t obviously correlate. Also, the word wrap feature in CoffeeCup doesn’t seem to scale with window size, or it doesn’t do anything, haha.

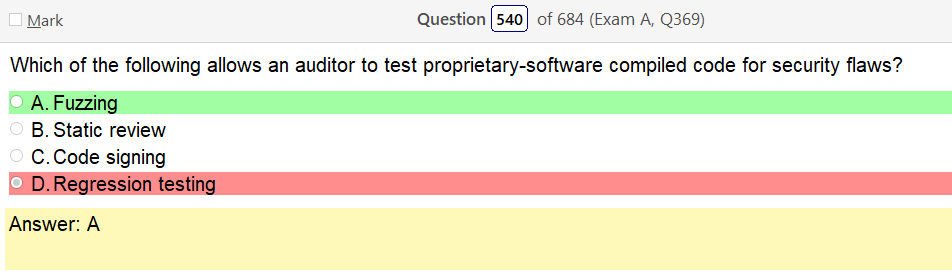

So this one seems a little grey IMO, as fuzzing is about testing how a program handles unexpected input, and this question is discussing ‘compiled code’, which may or may not take user input. Compiled code could run anything; it doesn’t have to connect to a database that you could run commands against.

Compiled code is source code that a separate program, the compiler, translates ahead of time into machine code, with the resulting files linked together into one program. This is opposed to interpreted code, like web scripts, host server scripts and BASIC, which is traditionally run one line at a time. A compiler is also designed to optimize the program so that it typically runs faster than an interpreted version of the same program. On Windows, the output of compiling and linking is an EXE or DLL file. A compiler also checks the code for errors: it scans the code for anything that is syntactically wrong and can warn about some things that would crash the program, though it can’t catch everything. A program with untested code can do anything to your computer, including very bad things, if it isn’t contained within its own space. A programmer spends lots of time getting an error-free compiled EXE file. (And even then you can get infinite loops, etc.) In the end, the point of compiling is to create an efficient, compact EXE file for optimal running of the program.
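The "compiler checks the code for errors" point can be tried out with Python's built-in `compile()`. This is only an analogy: `compile()` produces Python bytecode, not an EXE, and `check_syntax` is a helper name I made up for the sketch. It shows that a compile step rejects code that is syntactically wrong, but happily accepts an infinite loop.

```python
def check_syntax(source: str) -> str:
    """Try to compile the source without running it and report what happened."""
    try:
        compile(source, "<example>", "exec")
    except SyntaxError as err:
        return f"rejected: {err.msg}"
    return "compiled"

# A missing closing parenthesis is caught before anything runs.
broken = check_syntax("print('hi'")

# An infinite loop is valid syntax, so the compile step has no complaint.
looping = check_syntax("while True: pass")
```

So a clean compile only tells you the code is well formed, not that it behaves.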

However, when you google compiled code fuzzing it points at this: Compiler Fuzzing: How Much Does It Matter? So I’m going to read through it. Quick tip: get good at reading fast, remembering what you read and recognizing the highlights. It makes getting certs a lot more efficient.

So, after reading this, it seems like what they are pointing at is miscomputation errors made by compilers. Fuzzing, as I understood it, was more a matter of checking to see how code responds to things. If you follow that around, it turns out that what they mean is how the code responds to a specific set of instructions given by the user or machine that is interacting with the program, and checking if the results are correct. Under this logic it absolutely makes more sense. However, does it make more sense than labeling it regression testing? I guess we should get a better idea of what that is, because I thought that was sort of what regression testing was: does the application function as designed?
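To make "feed it inputs and check if the results are correct" concrete, here is a minimal sketch of a fuzzer that compares a function against a trusted reference. Everything in it is made up for illustration: `fast_abs` is a hypothetical function with a bug I planted on purpose, and `reference_abs` plays the role of the known-good answer. It is not the method from the paper.

```python
import random

def reference_abs(n: int) -> int:
    """Trusted, obviously correct version (the oracle)."""
    return n if n >= 0 else -n

def fast_abs(n: int) -> int:
    """Hypothetical 'optimized' version with a planted miscomputation."""
    if n == -7:  # deliberate bug so the fuzzer has something to find
        return -7
    return abs(n)

def fuzz(trials: int, seed: int = 0) -> list:
    """Feed random inputs to both versions and collect inputs where they disagree."""
    rng = random.Random(seed)
    failures = []
    for _ in range(trials):
        n = rng.randint(-10, 10)
        if fast_abs(n) != reference_abs(n):
            failures.append(n)
    return failures

bad_inputs = set(fuzz(1000))
```

The fuzzer never needs to know what the bug is. It only needs many inputs and a way to tell a right answer from a wrong one.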

REGRESSION TESTING is defined as a type of software testing to confirm that a recent program or code change has not adversely affected existing features. Regression Testing is nothing but a full or partial selection of already executed test cases which are re-executed to ensure existing functionalities work fine. This testing is done to make sure that new code changes should not have side effects on the existing functionalities. It ensures that the old code still works once the latest code changes are done.

So this seems to indicate that regression testing does a similar thing, but instead of being a new application, it’s an existing application that is adding new functionality. In this case, nothing in the question indicates that the code is a new feature of an older application.
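A minimal sketch of the regression idea, assuming a made-up shipping-cost function: `shipping_v2` adds a new feature, `shipping_broken` stands in for a release that accidentally changed old behaviour, and `EXISTING_CASES` are the test cases that already passed before the change. None of these names come from the question.

```python
def shipping_v2(weight_kg: int, express: bool = False) -> int:
    """New release: same pricing in cents as before, plus an optional express flag."""
    cost = 500 + 100 * weight_kg
    return cost * 2 if express else cost

def shipping_broken(weight_kg: int, express: bool = False) -> int:
    """A release where the base price was changed by accident."""
    cost = 600 + 100 * weight_kg
    return cost * 2 if express else cost

# Cases that passed against the previous release: (weight, expected cents).
EXISTING_CASES = [(0, 500), (1, 600), (10, 1500)]

def run_regression(func) -> list:
    """Re-run the old cases against a new build and return the ones that now fail."""
    return [(w, want, func(w)) for w, want in EXISTING_CASES if func(w) != want]

good_release = run_regression(shipping_v2)
bad_release = run_regression(shipping_broken)
```

The new express feature would get its own new tests. The regression run only cares that the old cases still come out the same.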

I’m going to define these, again.

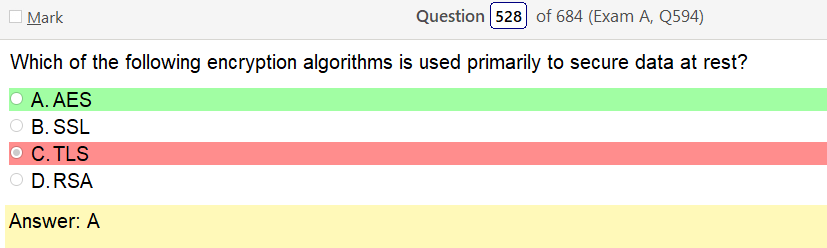

- AES – AES comprises three block ciphers: AES-128, AES-192 and AES-256. Each cipher encrypts and decrypts data in blocks of 128 bits using cryptographic keys of 128-, 192- and 256-bits, respectively.

- SSL – Secure Sockets Layer (SSL) is the now-deprecated predecessor of Transport Layer Security (TLS). Both are cryptographic protocols designed to provide communications security over a computer network. Several versions of the protocols find widespread use in applications such as web browsing, email, instant messaging, and voice over IP (VoIP).

- TLS – (the link is really good) Transport Layer Security, the more recent encryption protocol that has replaced SSL

- RSA – one of the first public-key cryptosystems and is widely used for secure data transmission. In such a cryptosystem, the encryption key is public and it is different from the decryption key which is kept secret.
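The RSA bullet (public encryption key, separate secret decryption key) can be shown with a toy textbook example. The tiny primes and the message value are assumptions picked so the numbers stay readable. Real RSA uses keys of 2048 bits or more plus padding, so this is a sketch of the idea and not something to actually encrypt with.

```python
# Toy textbook RSA. Illustration only: no padding, tiny primes, not secure.
p, q = 61, 53                # secret primes (assumed toy values)
n = p * q                    # modulus, shared by both keys
phi = (p - 1) * (q - 1)      # used only to derive the private exponent
e = 17                       # public exponent: (e, n) is the public key
d = pow(e, -1, phi)          # private exponent: (d, n) is kept secret (Python 3.8+)

def encrypt(message: int) -> int:
    """Anyone holding the public key (e, n) can do this."""
    return pow(message, e, n)

def decrypt(ciphertext: int) -> int:
    """Only the holder of the private exponent d can undo it."""
    return pow(ciphertext, d, n)

secret = 65
ciphertext = encrypt(secret)
recovered = decrypt(ciphertext)
```

The point is that `e` and `d` are different numbers: publishing `e` and `n` lets people encrypt to you without letting them decrypt.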

In addition, the Data at rest wiki notes: Data encryption, which prevents data visibility in the event of its unauthorized access or theft, is commonly used to protect data in motion and increasingly promoted for protecting data at rest.[7] The encryption of data at rest should only include strong encryption methods such as AES or RSA. Encrypted data should remain encrypted when access controls such as usernames and passwords fail. Increasing encryption on multiple levels is recommended. Cryptography can be implemented on the database housing the data and on the physical storage where the databases are stored. Data encryption keys should be updated on a regular basis. Encryption keys should be stored separately from the data. Encryption also enables crypto-shredding at the end of the data or hardware lifecycle. Periodic auditing of sensitive data should be part of policy and should occur on scheduled occurrences. Finally, only store the minimum possible amount of sensitive data.

Lots of useful information here but the answer was found in the Data at rest info.

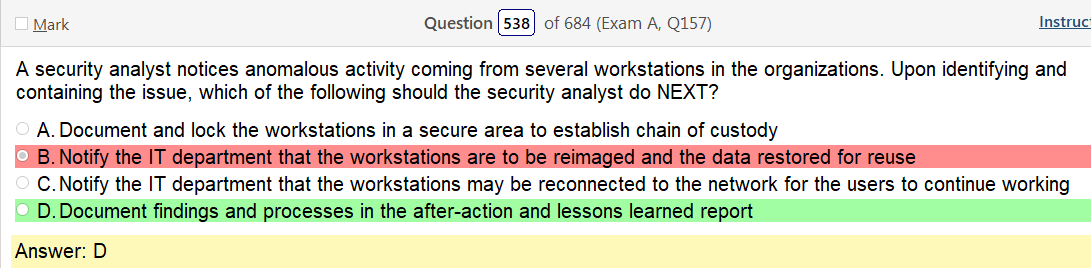

These types of questions seem subjective to me. I mean, if this ‘analyst’ isn’t in the security department, he should let them know rather than attempt to fix it himself. The other way is also true. However, it does say security analyst, and in this case it would appear that their department handles A-B remediation. Not sure if that’s always the case.

So, I have to admit, I did a lot of reading in the first half of this, was deliriously tired towards the end, and realized that I should go back through the links in this one and the previous one. I will do that tomorrow (possibly tonight) and possibly aim to get another one of these done. Anyway, back to this, and I’m going to make a list!

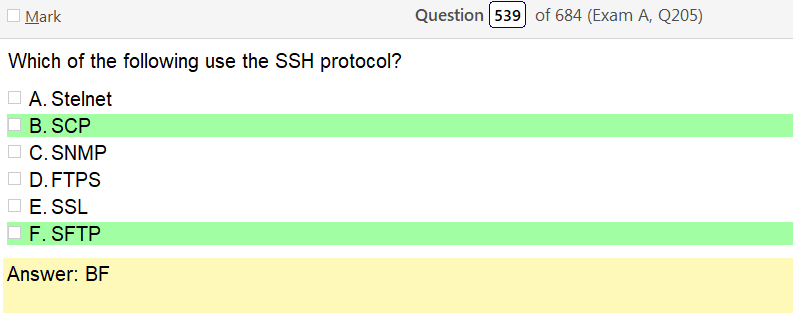

- STelnet – I’m pretty sure this is SSH, but it’s not really clear through Google.

- SCP – Secure copy protocol (SCP) is a means of securely transferring computer files between a local host and a remote host or between two remote hosts. It is based on the Secure Shell (SSH) protocol.[1] “SCP” commonly refers to both the Secure Copy Protocol and the program itself.[2] According to OpenSSH developers in April 2019, the scp protocol is outdated, inflexible and not readily fixed; they recommend the use of more modern protocols like sftp and rsync for file transfer. SCP is a network protocol, based on the BSD RCP protocol,[4] which supports file transfers between hosts on a network. SCP uses Secure Shell (SSH) for data transfer and uses the same mechanisms for authentication, thereby ensuring the authenticity and confidentiality of the data in transit. A client can send (upload) files to a server, optionally including their basic attributes (permissions, timestamps). Clients can also request files or directories from a server (download). SCP runs over TCP port 22 by default. Like RCP, there is no RFC that defines the specifics of the protocol.

- SNMP – an Internet Standard protocol for collecting and organizing information about managed devices on IP networks and for modifying that information to change device behavior. Devices that typically support SNMP include cable modems, routers, switches, servers, workstations, printers, and more.

- FTPS – FTPS (also known as FTPES, FTP-SSL, and FTP Secure) is an extension to the commonly used File Transfer Protocol (FTP) that adds support for the Transport Layer Security (TLS) and, formerly, the Secure Sockets Layer (SSL, which is now prohibited by RFC 7568) cryptographic protocols.

- SSL – Transport Layer Security (TLS), and its now-deprecated predecessor, Secure Sockets Layer (SSL),[1] are cryptographic protocols designed to provide communications security over a computer network.[2] Several versions of the protocols find widespread use in applications such as web browsing, email, instant messaging, and voice over IP (VoIP). Websites can use TLS to secure all communications between their servers and web browsers.

- SFTP – a command-line interface client program to transfer files using the SSH File Transfer Protocol (SFTP), which runs inside the encrypted Secure Shell connection.
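Since ports are the part where A doesn't always map to B, here is a small lookup sketch for the protocols in this list. Only the SCP entry (TCP 22) comes from the definitions above. The rest are the well-known default ports as I know them, and real deployments can move any of them. `DEFAULT_PORTS` and `protocols_on` are names I made up.

```python
# Well-known default ports (assumed defaults; servers can be configured otherwise).
DEFAULT_PORTS = {
    "FTP": [("tcp", 21)],
    "FTPS (explicit)": [("tcp", 21)],    # upgrades a normal FTP session to TLS
    "FTPS (implicit)": [("tcp", 990)],   # TLS from the first byte
    "SSH": [("tcp", 22)],
    "SCP": [("tcp", 22)],                # rides inside SSH
    "SFTP": [("tcp", 22)],               # rides inside SSH
    "Telnet": [("tcp", 23)],
    "SNMP": [("udp", 161), ("udp", 162)],  # 161 for queries, 162 for traps
}

def protocols_on(port: int) -> list:
    """Return every protocol in the table that uses the given port by default."""
    return sorted(
        name
        for name, endpoints in DEFAULT_PORTS.items()
        if any(number == port for _, number in endpoints)
    )

ssh_family = protocols_on(22)
ftp_family = protocols_on(21)
```

Looking things up by port instead of by name makes the overlap obvious: several "different" protocols are really one secure transport with different jobs on top.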

The problem with this is that the answer is only clear once you go through all of them and find a statement that says ‘implicitly uses x’.

I don’t know if I’ve said this before, but let me be clear: I hate these things.

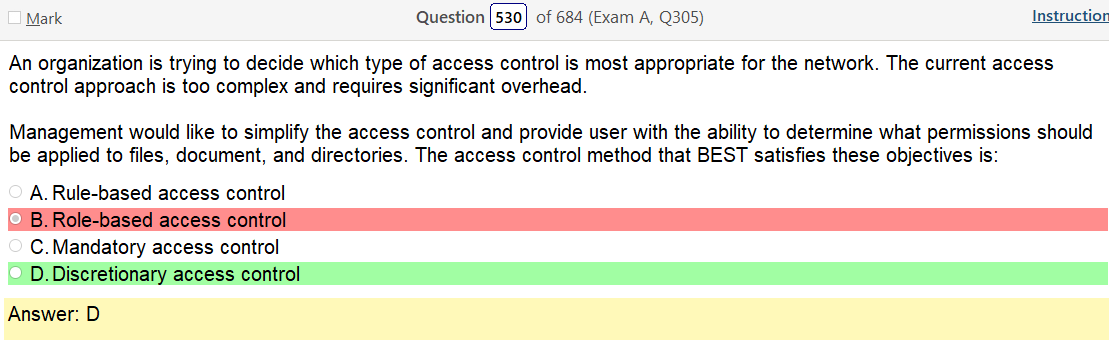

- Rule-based access control – With rule-based access control, when a request is made for access to a network or network resource, the controlling device, e.g. firewall, checks properties of the request against a set of rules. A rule might be to block an IP address, or a range of IP addresses. A rule might be to allow access to an IP address but block that IP address from use of a specific port, for example port 21 commonly used for FTP, or port 23 commonly used for Telnet. A rule might be to block a specific IP address, or block all IP addresses from accessing certain applications on the network, such as email or video streaming.

- Role-based access control – With role-based access control, when a request is made for access to a network or network resource, the controlling device allows or blocks access to a network or network resource based on that user’s role in the organization. For example, an individual with the engineer role in an organization might be allowed access to the specifications of parts used in the company’s product, but blocked access to employee records. An individual with the supervisor role might be allowed access to employee records, but blocked access to engineering documents and specifications.

- Mandatory access control – Often employed in government and military facilities, mandatory access control works by assigning a classification label to each file system object. Classifications include confidential, secret and top secret. Each user and device on the system is assigned a similar classification and clearance level. When a person or device tries to access a specific resource, the OS or security kernel will check the entity’s credentials to determine whether access will be granted. While it is the most secure access control setting available, MAC requires careful planning and continuous monitoring to keep all resource objects’ and users’ classifications up to date.

- Discretionary access control – A type of security access control that grants or restricts object access via an access policy determined by an object’s owner group and/or subjects. DAC mechanism controls are defined by user identification with supplied credentials during authentication, such as username and password. DACs are discretionary because the subject (owner) can transfer authenticated objects or information access to other users. In other words, the owner determines object access privileges.
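The four models are easier to tell apart side by side, so here is a minimal sketch with one tiny check per model. All the IPs, roles, labels and names are made-up examples, and each check is heavily simplified (real MAC systems, for instance, have more rules than a single level comparison).

```python
from dataclasses import dataclass, field

def rule_based(src_ip: str, port: int) -> bool:
    """Rule-based: decided by properties of the request, not by who is asking."""
    blocked_ips = {"203.0.113.9"}
    blocked_ports = {21, 23}          # FTP and Telnet, as in the definition above
    return src_ip not in blocked_ips and port not in blocked_ports

ROLE_PERMISSIONS = {"engineer": {"part_specs"}, "supervisor": {"employee_records"}}

def role_based(role: str, resource: str) -> bool:
    """Role-based: decided by the user's job role."""
    return resource in ROLE_PERMISSIONS.get(role, set())

LEVELS = {"confidential": 1, "secret": 2, "top secret": 3}

def mandatory(clearance: str, label: str) -> bool:
    """Mandatory: the system compares clearance to the object's label; users can't override."""
    return LEVELS[clearance] >= LEVELS[label]

@dataclass
class OwnedFile:
    """Discretionary: the owner decides who else gets access."""
    owner: str
    allowed: set = field(default_factory=set)

    def grant(self, granter: str, user: str) -> None:
        if granter != self.owner:
            raise PermissionError("only the owner can grant access")
        self.allowed.add(user)

    def can_read(self, user: str) -> bool:
        return user == self.owner or user in self.allowed

report = OwnedFile(owner="alice")
report.grant("alice", "bob")
```

The question to ask of each model is who makes the decision: a rule list, a role table, the system's labels, or the owner of the object.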

OK, those are starting to get a little clearer after reading definitions that are not from Wikipedia. Anyway, that’s all for today.

Photo unrelated
